
Artificial Intelligence (AI) is increasingly important to modern technology, powering search engines and generative tools like ChatGPT.

These technologies rely on “tokens”, the fundamental units of data that machines use to process and understand language. This guide covers the essentials and provides a method for estimating token counts from word count.

What are Tokens in AI?

In AI, particularly in the field of Natural Language Processing (NLP), a token is a basic, indivisible unit of data. It's the smallest piece of text that a model handles as a single unit.

AI tools tokenize text to understand it. Tokenization is the process of converting a sequence of characters into a sequence of tokens, which could be words, phrases, symbols, or any element of text that the AI needs to process.

This procedure is fundamental for preparing data for further processing, such as parsing, stemming, or feeding into machine learning models for tasks like translation, sentiment analysis, and more. Tokens allow AI systems to break down and interpret human language in a structured and analyzable form.

Simplified Example

Imagine you have a giant box of LEGOs, and you’re trying to build something cool. 😎

When computers try to understand and use human language, a “token” is like a single LEGO piece from that box.

When a computer reads sentences, it breaks them down into smaller pieces. These are tokens, like picking out specific LEGO pieces to build a model.

With the sentence “The plant is green”, each word is a token the computer uses to understand the sentence, making it 4 tokens.
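In this simplified picture, tokenizing just means splitting a sentence on spaces. Here is a minimal Python sketch of that word-level idea. The function name `word_tokenize` is illustrative, and real AI tokenizers are more sophisticated, as the following sections show:

```python
def word_tokenize(sentence: str) -> list[str]:
    """Split a sentence into word tokens on whitespace (a deliberately naive tokenizer)."""
    return sentence.split()


tokens = word_tokenize("The plant is green")
print(tokens)       # ['The', 'plant', 'is', 'green']
print(len(tokens))  # 4
```

With a trailing period, this naive split would leave "green." as a single token, which is one reason real tokenizers treat punctuation separately.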

What Qualifies as a Token?

In AI and specifically in NLP, a token can qualify as any piece of text treated as a single unit for analysis. This can be a word, a punctuation mark, a number, or even a symbol.

The definition of what qualifies as a token depends on the task at hand and how the text is being processed or tokenized.

Types of Tokens

While what qualifies as a token varies from one tokenizer to another, these are the standard units:

- Words: In many cases, tokens are individual words.

- Punctuation: Punctuation marks can also be tokens, especially if they’re important for understanding the structure or meaning of a sentence.

- Characters: In some approaches, especially for certain languages or specific applications, each character, including letters, numbers, and symbols, can be considered a token.

- Subwords or Morphemes: For languages where words can be very complex (Finnish, Turkish, German) or for handling unfamiliar words, subwords or morphemes (the smallest meaningful units in language) might be used as tokens.
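To make the word, punctuation, and character types concrete, here is a small Python sketch using the standard `re` module. The function names are hypothetical, and this is an illustration of the token types rather than how any particular AI model tokenizes text:

```python
import re


def word_punct_tokens(text: str) -> list[str]:
    """Word-level tokens, with each punctuation mark as its own token."""
    # \w+ matches a run of letters, digits, or underscores (a word);
    # [^\w\s] matches one character that is neither a word character
    # nor whitespace, i.e. a punctuation mark or symbol.
    return re.findall(r"\w+|[^\w\s]", text)


def char_tokens(text: str) -> list[str]:
    """Character-level tokens: every character, including spaces, is a token."""
    return list(text)


sentence = "The plant is green."
print(word_punct_tokens(sentence))  # ['The', 'plant', 'is', 'green', '.']
print(len(char_tokens(sentence)))   # 19
```

The same sentence becomes 5 tokens at the word-and-punctuation level but 19 at the character level, which is why the choice of tokenization scheme matters so much for token counts.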

Ultimately, a token is defined by how the text is split during tokenization, which prepares it for further processing or analysis by AI models. That's why the easiest way to understand tokens is to try a token-counting tool.

Tokenization Calculation Examples

To visualize the tokenization process, let's walk through some examples of how tokens are counted using OpenAI's tokenizer, a helpful tool for measuring tokens.

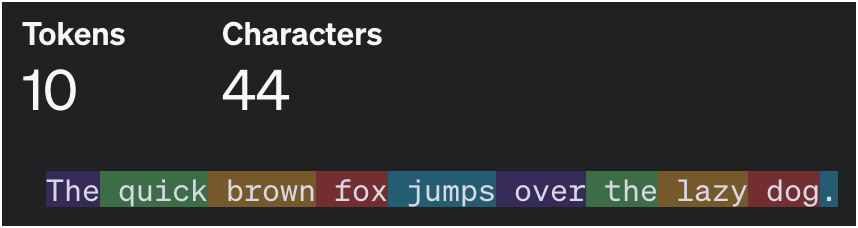

For instance, the sentence “The quick brown fox jumps over the lazy dog.” is segmented into 10 tokens.

Each word in this sentence is worth one token, and the period at the end is worth one more.

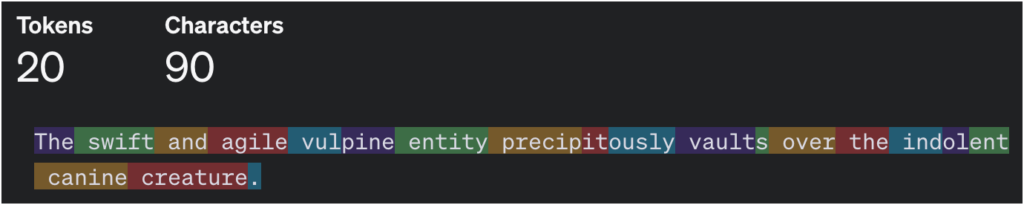

Now let's tokenize a more complicated version of the same sentence: “The swift and agile vulpine entity precipitously vaults over the indolent canine creature.”

Notice how differently this is tokenized. Common words like “The” and “swift” are worth only one token each, but less common words like “precipitously” and “vaults” are split into multiple tokens.
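Why would a rarer word split into pieces? Modern tokenizers work from a fixed vocabulary of subword pieces learned from training data, so frequent words tend to get a single entry while rare words are assembled from smaller fragments. The sketch below illustrates that idea with a greedy longest-match strategy (similar in spirit to WordPiece), not the byte pair encoding (BPE) that OpenAI's tokenizers use. Its vocabulary is invented for illustration, so its splits will not match the OpenAI tool:

```python
def greedy_subword_tokenize(word: str, vocab: set[str]) -> list[str]:
    """Split one word into the longest vocabulary pieces, scanning left to right.

    Falls back to a single character when no longer piece matches, so any
    word can be tokenized even if it is not in the vocabulary.
    """
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest possible piece first, then shrink it one character at a time.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab or end - start == 1:
                tokens.append(piece)
                start = end
                break
    return tokens


# Hypothetical toy vocabulary: the common word is a whole entry, the rare ones are not.
VOCAB = {"the", "swift", "pre", "cip", "it", "ous", "ly", "vault", "s"}

print(greedy_subword_tokenize("swift", VOCAB))          # ['swift']
print(greedy_subword_tokenize("vaults", VOCAB))         # ['vault', 's']
print(greedy_subword_tokenize("precipitously", VOCAB))  # ['pre', 'cip', 'it', 'ous', 'ly']
```

The single-character fallback is a design choice that guarantees every input can be tokenized; real tokenizers achieve the same guarantee by including all individual characters or bytes in their vocabulary.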

How to Estimate Tokens by Word Count

Pasting text into a tokenizer tool gives you an exact count, but for quick estimates you can work from word count instead. This section describes a simple method.

Token Approximations

A few rules of thumb relate token counts to text length for typical English text:

| Approximate Tokens | Text Length |
|---|---|
| 1 | 4 characters (most common) |
| 1 | 3/4 word |
| 30 | 1-2 sentences |
| 100 | 75 words |
| 100 | 1 paragraph |
| 2,048 | 1,500 words |
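The two per-unit rows of the table translate directly into code. Here is a minimal Python sketch; the function names are hypothetical, and the results are rough estimates for English text, not exact counts:

```python
def tokens_from_chars(text: str) -> float:
    """Estimate tokens with the ~4 characters per token rule of thumb."""
    return len(text) / 4


def tokens_from_words(word_count: int) -> float:
    """Estimate tokens with the ~3/4 word per token rule (100 tokens ≈ 75 words)."""
    return word_count / 0.75


print(tokens_from_words(75))    # 100.0
print(tokens_from_words(1500))  # 2000.0
print(tokens_from_chars("The quick brown fox jumps over the lazy dog."))  # 11.0
```

The rules don't agree perfectly with each other or with a real tokenizer: the word rule gives 2,000 tokens for 1,500 words versus the table's 2,048, and the character rule gives 11 for the fox sentence that OpenAI's tokenizer counts as 10. That spread is normal for rules of thumb.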

You can combine these ratios with typical text metrics, such as the average number of words per sentence or paragraph, to build fairly reliable estimates.

Instead of doing that more involved math, we'll use a simpler, generalized formula.

Word Count to Token Estimation Formula

For a super straightforward way to estimate tokens from word count, you can use this:

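Tokens ≈ Words + 25%

This formula is a rounded-down take on the 1 token ≈ 3/4 word rule of thumb. Applied exactly, that rule gives Tokens = Words ÷ 0.75, or roughly Words + 33%, so the +25% version tends to run slightly low. Let's do the math for a hypothetical 1,000-word text: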

1,000 words × 1.25 = 1,250 tokens

This method gives you a rough estimate that's easy to remember and communicate, suitable for most cases where you need a quick token count.
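Here is the same formula as a small Python function. The `count_words` helper is a simplifying assumption: it splits on whitespace, so hyphenated words or numbers may be counted differently than in a word processor:

```python
def estimate_tokens(word_count: int) -> int:
    """Tokens ≈ Words + 25%, rounded to a whole token (Python rounds halves to even)."""
    return round(word_count * 1.25)


def count_words(text: str) -> int:
    """Count whitespace-separated words (a simple approximation of word count)."""
    return len(text.split())


print(estimate_tokens(1_000))  # 1250
print(estimate_tokens(count_words("The quick brown fox jumps over the lazy dog.")))  # 11
```

For the fox sentence, the formula gives 11 tokens, one more than the tokenizer's actual count of 10, which is about as close as a quick estimate can be expected to get.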

Tokens and Context Windows

Context windows play a significant role in how AI processes tokens. A context window is the maximum number of tokens a model can consider at once, typically including both your input and the model's response, so it limits how much text the model can take into account.

The size of a context window can significantly affect the quality of the AI's understanding and output. For example, a larger context window lets the AI consider more surrounding information, which can lead to more accurate responses.
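In practice, an application has to keep the token count within the window. The sketch below shows two common ideas under stated assumptions: checking whether a prompt plus the space reserved for the reply fits, and dropping the oldest tokens when it doesn't. It uses plain strings as stand-in tokens and made-up window sizes; real models use tokenizer-specific token IDs and windows of many thousands of tokens, and all names here are hypothetical:

```python
def fits_in_window(prompt_tokens: int, max_output_tokens: int, window_size: int) -> bool:
    """Check that the prompt plus the tokens reserved for the reply fit in the window."""
    return prompt_tokens + max_output_tokens <= window_size


def fit_to_window(tokens: list[str], window_size: int) -> list[str]:
    """Keep only the most recent tokens that fit in the window, dropping the oldest."""
    if window_size <= 0:
        return []  # guard: tokens[-0:] would return the whole list
    return tokens[-window_size:]


conversation = "the quick brown fox jumps over the lazy dog and runs away".split()
print(fit_to_window(conversation, 8))
# ['jumps', 'over', 'the', 'lazy', 'dog', 'and', 'runs', 'away']
print(fits_in_window(1_200, 300, 1_500))  # True
```

Truncating from the front keeps the most recent text, which is usually what matters most in a conversation; other strategies, such as summarizing older text, trade some detail for a smaller token count.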

Most people don't need to worry about context window size, as the top generative AI tools have long context windows. Tools like ChatGPT, Google Gemini, and Claude can generally handle at least the text of a short novel.

Bottom Line

Tokens are like the ABCs for robots in understanding human language. They help break down language into pieces small enough for AI to work with, making it possible for computer models to grasp the context and help with tasks. 🌏